1. Preparation before deployment

1.1 Deployment plan

Operating system: Ubuntu 20.04

| Node | Hostname | IP address |
|---|---|---|
| master1 | k8s-master1.kubeadm.com | 172.16.1.101 |
| master2 | k8s-master2.kubeadm.com | 172.16.1.102 |
| master3 | k8s-master3.kubeadm.com | 172.16.1.103 |
| ha1 | k8s-ha1.kubeadm.com | 172.16.1.104 |
| ha2 | k8s-ha2.kubeadm.com | 172.16.1.105 |
| harbor1 | k8s-harbor1.kubeadm.com | 172.16.1.106 |
| node1 | k8s-node1.kubeadm.com | 172.16.1.107 |
| node2 | k8s-node2.kubeadm.com | 172.16.1.108 |
| node3 | k8s-node3.kubeadm.com | 172.16.1.109 |
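The nodes refer to each other by these names (for example the Harbor registry name used later in the Docker configuration). If no internal DNS resolves them, one option is to add the table to `/etc/hosts` on every node. A minimal sketch, assuming no DNS for `kubeadm.com`; the helper name `gen_hosts` is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print /etc/hosts entries for the planned nodes
# (assumes no internal DNS resolves the *.kubeadm.com names).
gen_hosts() {
  local domain="kubeadm.com" ip short
  while read -r ip short; do
    # FQDN first, short hostname as an alias
    printf '%s %s.%s %s\n' "$ip" "$short" "$domain" "$short"
  done <<'EOF'
172.16.1.101 k8s-master1
172.16.1.102 k8s-master2
172.16.1.103 k8s-master3
172.16.1.104 k8s-ha1
172.16.1.105 k8s-ha2
172.16.1.106 k8s-harbor1
172.16.1.107 k8s-node1
172.16.1.108 k8s-node2
172.16.1.109 k8s-node3
EOF
}
# Usage on every node (as root):
#   gen_hosts >> /etc/hosts
#   hostnamectl set-hostname k8s-master1.kubeadm.com   # per node, from the table
```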

1.2 Notes before deployment

Disable the swap partition, disable SELinux and turn off the firewall.
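`swapoff -a` only disables swap until the next reboot, so the swap entry in `/etc/fstab` also needs to be commented out. A minimal sketch, assuming the standard fstab field order (device, mount point, type, ...); `comment_out_swap` is a hypothetical name. On Ubuntu, SELinux is not enabled by default and the default firewall front-end is `ufw`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: comment out active swap entries in an fstab-style file
# so swap stays disabled after a reboot. A copy is kept as <file>.bak.
comment_out_swap() {
  local fstab="${1:-/etc/fstab}"
  cp -- "$fstab" "$fstab.bak"
  # Leave existing comments alone; prefix "# " to lines whose third field
  # (the filesystem type) is "swap"; print everything else unchanged.
  awk '!/^[[:space:]]*#/ && $3 == "swap" { print "# " $0; next } { print }' \
    "$fstab.bak" > "$fstab"
}
# Usage (as root):
#   swapoff -a                    # turn swap off now
#   comment_out_swap /etc/fstab   # keep it off after reboot
#   ufw disable                   # Ubuntu's firewall front-end
```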

Tune the kernel parameters and resource limits:

net.bridge.bridge-nf-call-ip6tables = 1

net.bridge.bridge-nf-call-iptables = 1

#With these set, packets forwarded by the layer-2 bridge are matched by the host's iptables FORWARD rules

2. Deployment steps
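The bridge parameters from 1.2 only exist once the `br_netfilter` kernel module is loaded, and values set with `sysctl -w` are lost on reboot. A sketch that renders persistent drop-in files (helper and file names are illustrative; `net.ipv4.ip_forward` is not in the original list but is one of the values kubeadm's preflight checks expect to be 1):

```shell
#!/usr/bin/env bash
# Hypothetical helpers: content for /etc/modules-load.d/k8s.conf and
# /etc/sysctl.d/k8s.conf on every master and worker node.
k8s_modules_conf() {
  # net.bridge.* keys only appear after this module is loaded
  echo br_netfilter
}
k8s_sysctl_conf() {
  cat <<'EOF'
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
# Added here as an assumption: kubeadm preflight checks ip_forward = 1
net.ipv4.ip_forward = 1
EOF
}
# Usage (as root):
#   k8s_modules_conf > /etc/modules-load.d/k8s.conf && modprobe br_netfilter
#   k8s_sysctl_conf > /etc/sysctl.d/k8s.conf && sysctl --system
```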

2.1 Deploy the highly available reverse proxy: HAProxy and Keepalived

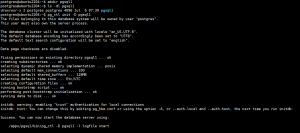

2.1.1 Install Keepalived on node 1

#Install keepalived with apt

root@k8s-ha1:~# apt update

root@k8s-ha1:~# apt install -y keepalived

#Find the keepalived configuration template and copy it to /etc/keepalived/keepalived.conf

root@k8s-ha1:~# find / -name 'keepalived*'

root@k8s-ha1:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

#Edit the configuration file and configure the VIP 172.16.1.110

root@k8s-ha1:~# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state MASTER

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 100

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.1.110 label eth0:1

}

}
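The two HA nodes must agree on `virtual_router_id`, `auth_pass` and the VIP and should differ only in `state` and `priority`, so the `vrrp_instance` block can be rendered from one template. A sketch with a hypothetical `render_vrrp` helper; `BACKUP` on the second node is the usual convention (the node with the higher priority wins the election either way):

```shell
#!/usr/bin/env bash
# Hypothetical helper: render the vrrp_instance block shared by k8s-ha1 and
# k8s-ha2. Arguments: STATE PRIORITY [VIP] [INTERFACE].
render_vrrp() {
  local state="$1" priority="$2"
  local vip="${3:-172.16.1.110}" iface="${4:-eth0}"
  cat <<EOF
vrrp_instance VI_1 {
    state ${state}
    interface ${iface}
    garp_master_delay 10
    smtp_alert
    virtual_router_id 51
    priority ${priority}
    advert_int 1
    authentication {
        auth_type PASS
        auth_pass 1111
    }
    virtual_ipaddress {
        ${vip} label ${iface}:1
    }
}
EOF
}
# Usage: paste the output in place of the vrrp_instance block
#   render_vrrp MASTER 100   # on k8s-ha1
#   render_vrrp BACKUP 99    # on k8s-ha2
```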

#Restart the service and enable it at boot

root@k8s-ha1:~# systemctl restart keepalived.service

root@k8s-ha1:~# systemctl enable keepalived.service

#Check whether the VIP has been created

root@k8s-ha1:~# ip a

![Image [1] - kubeadm deployment of highly available Kubernetes 1.20.5 - Li Jiacheng's personal homepage](http://39.101.72.1/wp-content/uploads/2022/10/image-15.png)
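The `ip a` check can also be scripted, for example for monitoring or for the failover test on node 2. A minimal sketch that parses `ip -o -4 addr show` (one address per line, address in the fourth field) from stdin; `vip_present` is a hypothetical name:

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed if the given IPv4 address appears in
# `ip -o -4 addr show` output read from stdin.
vip_present() {
  local vip="$1"
  awk -v ip="$vip" '
    $3 == "inet" {
      addr = $4
      sub(/\/.*/, "", addr)   # drop the prefix length, e.g. /32
      if (addr == ip) found = 1
    }
    END { exit !found }
  '
}
# Usage:
#   ip -o -4 addr show | vip_present 172.16.1.110 && echo "VIP is on $(hostname)"
```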

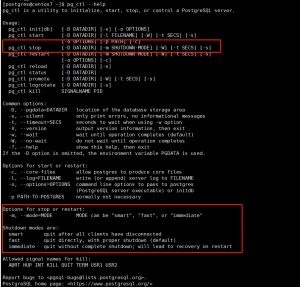

2.1.2 Install Keepalived on node 2

root@k8s-ha2:~# apt update

root@k8s-ha2:~# apt install -y keepalived

root@k8s-ha2:~# find / -name 'keepalived*'

root@k8s-ha2:~# cp /usr/share/doc/keepalived/samples/keepalived.conf.vrrp /etc/keepalived/keepalived.conf

#Edit the configuration file: same VIP 172.16.1.110, but set state to BACKUP and a lower priority (the higher value wins)

root@k8s-ha2:~# vim /etc/keepalived/keepalived.conf

vrrp_instance VI_1 {

state BACKUP

interface eth0

garp_master_delay 10

smtp_alert

virtual_router_id 51

priority 99

advert_int 1

authentication {

auth_type PASS

auth_pass 1111

}

virtual_ipaddress {

172.16.1.110 label eth0:1

}

}

root@k8s-ha2:~# systemctl restart keepalived.service

root@k8s-ha2:~# systemctl enable keepalived.service

#Stop the service on node 1, then check whether the VIP has moved to node 2

root@k8s-ha1:~# systemctl stop keepalived.service

root@k8s-ha2:~# ip a

![Image [2]](http://39.101.72.1/wp-content/uploads/2022/10/image-16.png)

2.1.3 Install HAProxy on node 1

#Install HAProxy with apt

root@k8s-ha1:~# apt install -y haproxy

#Edit the configuration file and add the listen sections

root@k8s-ha1:~# vim /etc/haproxy/haproxy.cfg

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:q1w2e3r4ys

listen k8s-apiserver:6443

bind 172.16.1.110:6443

mode tcp

server 172.16.1.101 172.16.1.101:6443 check inter 3s fall 3 rise 5

server 172.16.1.102 172.16.1.102:6443 check inter 3s fall 3 rise 5

server 172.16.1.103 172.16.1.103:6443 check inter 3s fall 3 rise 5
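Each `server` line uses `check inter 3s fall 3 rise 5`: HAProxy probes the master every 3 seconds, marks it down after 3 consecutive failed checks and up again after 5 consecutive successes. In `mode tcp` a plain `check` is a TCP connect test on port 6443. A sketch of a hypothetical helper that generates the same lines for any list of masters:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print HAProxy server lines for the given master IPs,
# using the same health-check parameters as the listen section above.
apiserver_servers() {
  local port="${APISERVER_PORT:-6443}" ip
  for ip in "$@"; do
    printf '    server %s %s:%s check inter 3s fall 3 rise 5\n' "$ip" "$ip" "$port"
  done
}
# Usage:
#   apiserver_servers 172.16.1.101 172.16.1.102 172.16.1.103
#   haproxy -c -f /etc/haproxy/haproxy.cfg   # syntax-check before restarting
```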

#Restart the service and enable it at boot

root@k8s-ha1:~# systemctl restart haproxy.service; systemctl enable haproxy

2.1.4 Install HAProxy on node 2

root@k8s-ha2:~# apt install -y haproxy

root@k8s-ha2:~# vim /etc/haproxy/haproxy.cfg

listen stats

mode http

bind 0.0.0.0:9999

stats enable

log global

stats uri /haproxy-status

stats auth haadmin:q1w2e3r4ys

listen k8s-apiserver:6443

bind 172.16.1.110:6443

mode tcp

server 172.16.1.101 172.16.1.101:6443 check inter 3s fall 3 rise 5

server 172.16.1.102 172.16.1.102:6443 check inter 3s fall 3 rise 5

server 172.16.1.103 172.16.1.103:6443 check inter 3s fall 3 rise 5
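Both HAProxy instances bind to the VIP 172.16.1.110:6443, but only the node currently holding the VIP owns that address, so on the other node HAProxy cannot bind and does not start. A common workaround, sketched here as an assumption rather than part of the original setup, is to allow non-local binds on both HA nodes (helper and file names are illustrative):

```shell
#!/usr/bin/env bash
# Hypothetical helper: sysctl setting that lets HAProxy bind the VIP even
# while keepalived has placed it on the other HA node.
nonlocal_bind_conf() {
  echo 'net.ipv4.ip_nonlocal_bind = 1'
}
# Usage on k8s-ha1 and k8s-ha2 (as root):
#   nonlocal_bind_conf > /etc/sysctl.d/99-haproxy-vip.conf
#   sysctl --system
#   systemctl restart haproxy.service
```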

root@k8s-ha2:~# systemctl restart haproxy.service; systemctl enable haproxy

2.2 Deploy the Docker image registry: Harbor

To be covered in a later update.

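2.3 Install kubeadm and other components

Install kubeadm, kubelet, kubectl, Docker and related components on the three master nodes and the three worker nodes. The load balancer servers do not need them.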

2.3.1 Install Docker

Install Docker on all nodes.

#Install some required system tools

sudo apt-get update

apt -y install apt-transport-https ca-certificates curl software-properties-common

#Install the GPG key

curl -fsSL http://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -

#Add the repository information

sudo add-apt-repository "deb [arch=amd64] http://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"

#Update the package index

apt-get -y update

#List the Docker versions available for installation

apt-cache madison docker-ce docker-ce-cli
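The version passed to `apt install` is the full Debian version string from the `apt-cache madison` output (epoch `5:`, upstream version, then a distribution suffix). A sketch of a hypothetical helper that picks that string for a given upstream version:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read `apt-cache madison` output on stdin and print the
# full package version matching an upstream version such as 19.03.15.
pick_docker_version() {
  local want="$1"
  awk -F'|' -v want="$want" '
    {
      ver = $2
      gsub(/[[:space:]]/, "", ver)   # strip the column padding
      # ":" and "~" around the version avoid matching 19.03.1 inside 19.03.15
      if (index(ver, ":" want "~") && !seen[ver]++) print ver
    }
  '
}
# Usage:
#   v=$(apt-cache madison docker-ce | pick_docker_version 19.03.15 | head -n1)
#   apt install -y "docker-ce=$v" "docker-ce-cli=$v"
```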

#Install and start Docker 19.03.15:

apt install -y docker-ce=5:19.03.15~3-0~ubuntu-focal docker-ce-cli=5:19.03.15~3-0~ubuntu-focal

systemctl start docker && systemctl enable docker

#Verify the Docker version

docker version

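#Modify the service file so that Docker accepts the Harbor registry k8s-harbor1.kubeadm.com as an insecure (non-TLS) registry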

vim /usr/lib/systemd/system/docker.service

ExecStart=/usr/bin/dockerd -H fd:// --containerd=/run/containerd/containerd.sock --insecure-registry=k8s-harbor1.kubeadm.com
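An alternative to editing the packaged unit file, which a docker-ce upgrade may replace, is `/etc/docker/daemon.json`. Use one place or the other, not both: dockerd treats an option given both as a flag and in `daemon.json` as a configuration conflict. A sketch with a hypothetical helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: daemon.json equivalent of --insecure-registry.
docker_daemon_json() {
  local registry="${1:-k8s-harbor1.kubeadm.com}"
  cat <<EOF
{
  "insecure-registries": ["${registry}"]
}
EOF
}
# Usage (as root), with --insecure-registry removed from ExecStart again:
#   docker_daemon_json > /etc/docker/daemon.json
#   systemctl restart docker.service
```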

#Reload the service files and restart the service

systemctl daemon-reload

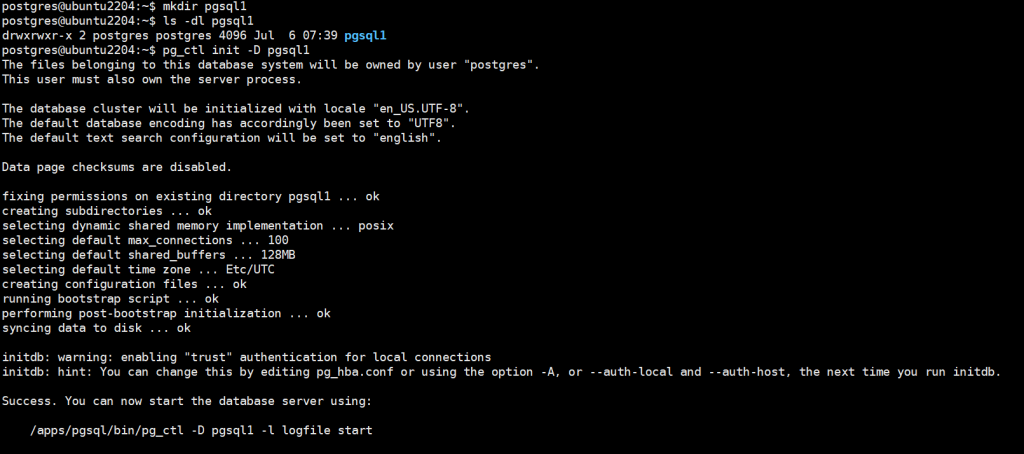

systemctl restart docker.service

2.3.2 Install kubelet, kubeadm and kubectl on all nodes

Use the Alibaba Cloud Kubernetes mirror:

apt-get update && apt-get install -y apt-transport-https

curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -

cat <<EOF >/etc/apt/sources.list.d/kubernetes.list

deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main

EOF

#Start installing kubeadm; list the available kubeadm versions:

apt-get update

apt-cache madison kubeadm

#On all master nodes

apt-get install kubelet=1.20.5-00 kubeadm=1.20.5-00 kubectl=1.20.5-00

#On all worker nodes

apt-get install kubelet=1.20.5-00 kubeadm=1.20.5-00
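On the worker nodes only kubelet and kubeadm are installed. To keep a routine `apt upgrade` from moving the cluster off 1.20.5, the packages can be pinned with `apt-mark hold`. A sketch of a hypothetical helper that double-checks installed versions from `dpkg-query` output:

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "<package> <version>" lines on stdin and report
# any package whose version differs from the target; non-zero on mismatch.
check_kube_versions() {
  local want="$1"
  awk -v want="$want" '
    $2 != want { print "mismatch: " $1 " " $2; bad = 1 }
    END { exit (bad ? 1 : 0) }
  '
}
# Usage (drop kubectl on worker nodes):
#   dpkg-query -W -f='${Package} ${Version}\n' kubelet kubeadm kubectl | check_kube_versions 1.20.5-00
#   apt-mark hold kubelet kubeadm kubectl
```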

#Verify the version

kubeadm version

![Image [3]](http://39.101.72.1/wp-content/uploads/2022/10/image-17.png)

2.3.3 Prepare the images

#List the images required by this version

root@k8s-master1:~# kubeadm config images list --kubernetes-version v1.20.5

k8s.gcr.io/kube-apiserver:v1.20.5

k8s.gcr.io/kube-controller-manager:v1.20.5

k8s.gcr.io/kube-scheduler:v1.20.5

k8s.gcr.io/kube-proxy:v1.20.5

k8s.gcr.io/pause:3.2

k8s.gcr.io/etcd:3.4.13-0

k8s.gcr.io/coredns:1.7.0

#Pull the images from a mirror in China instead

root@k8s-master1:~# vim imagesdown.sh

#!/bin/bash

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.20.5

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0

docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0

root@k8s-master1:~# bash imagesdown.sh
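The names in imagesdown.sh are the `kubeadm config images list` output with the `k8s.gcr.io` prefix replaced, so the pull list can be generated instead of typed. A sketch with a hypothetical `to_mirror` helper; `kubeadm config images pull` also accepts the same `--image-repository` and `--kubernetes-version` flags:

```shell
#!/usr/bin/env bash
# Hypothetical helper: rewrite k8s.gcr.io image names read on stdin to the
# Aliyun google_containers mirror; lines without that prefix are dropped.
to_mirror() {
  local mirror="${1:-registry.cn-hangzhou.aliyuncs.com/google_containers}"
  sed -n "s#^k8s\.gcr\.io/#${mirror}/#p"
}
# Usage:
#   kubeadm config images list --kubernetes-version v1.20.5 | to_mirror | xargs -n1 docker pull
# or:
#   kubeadm config images pull --kubernetes-version v1.20.5 \
#     --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers
```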

#List the images

root@k8s-master1:~# docker images

![Image [4]](http://39.101.72.1/wp-content/uploads/2022/10/image-18.png)

2.4 Initialize the cluster

root@k8s-master1:~# kubeadm init --apiserver-advertise-address=172.16.1.101 --control-plane-endpoint=172.16.1.110 --apiserver-bind-port=6443 --kubernetes-version=v1.20.5 --pod-network-cidr=10.100.0.0/16 --service-cidr=10.200.0.0/16 --service-dns-domain=kubeadm.local --image-repository=registry.cn-hangzhou.aliyuncs.com/google_containers --ignore-preflight-errors=swap

Your Kubernetes control-plane has initialized successfully!

#Run the commands as prompted to set up the kubeconfig

To start using your cluster, you need to run the following as a regular user:

mkdir -p $HOME/.kube

sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

sudo chown $(id -u):$(id -g) $HOME/.kube/config

Alternatively, if you are the root user, you can run:

export KUBECONFIG=/etc/kubernetes/admin.conf

#Used to deploy the network add-on

You should now deploy a pod network to the cluster.

Run "kubectl apply -f [podnetwork].yaml" with one of the options listed at:

https://kubernetes.io/docs/concepts/cluster-administration/addons/

You can now join any number of control-plane nodes by copying certificate authorities

and service account keys on each node and then running the following as root:

#Used to join the other master nodes to the cluster

kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047 \

--control-plane

Then you can join any number of worker nodes by running the following on each as root:

#Used to join worker nodes to the cluster

kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047

![Image [5]](http://39.101.72.1/wp-content/uploads/2022/10/image-25.png)
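The `kubeadm init` flags used above can also be kept in a kubeadm configuration file, which is easier to review and reuse. A sketch of an equivalent file, assuming the `kubeadm.k8s.io/v1beta2` API accepted by kubeadm 1.20; the helper and file names are illustrative, and `--ignore-preflight-errors=swap` is left out because swap is disabled in 1.2:

```shell
#!/usr/bin/env bash
# Hypothetical helper: kubeadm config equivalent of the kubeadm init flags
# used on k8s-master1 (kubeadm.k8s.io/v1beta2).
render_kubeadm_config() {
  cat <<'EOF'
apiVersion: kubeadm.k8s.io/v1beta2
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 172.16.1.101   # --apiserver-advertise-address
  bindPort: 6443                   # --apiserver-bind-port
---
apiVersion: kubeadm.k8s.io/v1beta2
kind: ClusterConfiguration
kubernetesVersion: v1.20.5
controlPlaneEndpoint: "172.16.1.110:6443"   # keepalived VIP behind HAProxy
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
networking:
  podSubnet: 10.100.0.0/16       # --pod-network-cidr
  serviceSubnet: 10.200.0.0/16   # --service-cidr
  dnsDomain: kubeadm.local       # --service-dns-domain
EOF
}
# Usage on k8s-master1 (as root):
#   render_kubeadm_config > kubeadm-config.yaml
#   kubeadm init --config kubeadm-config.yaml --upload-certs
```

Passing `--upload-certs` at init time should also upload the control-plane certificates and print the certificate key, which would make the separate `kubeadm init phase upload-certs` step unnecessary as long as the other masters join within its two-hour window.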

#Run the commands as prompted

root@k8s-master1:~# mkdir -p $HOME/.kube

root@k8s-master1:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master1:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

root@k8s-master1:~# export KUBECONFIG=/etc/kubernetes/admin.conf

2.5 Deploy the network add-on

#Download the flannel installation manifest

root@k8s-master1:~# wget https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml

#In net-conf.json, set Network to the range passed to --pod-network-cidr at initialization

root@k8s-master1:~# vim kube-flannel.yml

net-conf.json: |

{

"Network": "10.100.0.0/16",

"Backend": {

"Type": "vxlan"

}

}
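Instead of editing in vim, the value can be set non-interactively. Flannel's upstream manifest defaults to `10.244.0.0/16`, and the value has to match `--pod-network-cidr`. A sketch with a hypothetical `set_flannel_network` helper:

```shell
#!/usr/bin/env bash
# Hypothetical helper: replace the "Network" value in kube-flannel.yml's
# net-conf.json; returns non-zero if the new value is not found afterwards.
set_flannel_network() {
  local file="$1" cidr="$2"
  sed -i -E "s#(\"Network\":[[:space:]]*\")[^\"]*(\")#\1${cidr}\2#" "$file"
  grep -q "\"Network\": *\"${cidr}\"" "$file"
}
# Usage:
#   set_flannel_network kube-flannel.yml 10.100.0.0/16
```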

#Create the pods from the manifest and check them

root@k8s-master1:~# kubectl apply -f kube-flannel.yml

root@k8s-master1:~# kubectl get pod -A

![Image [6]](http://39.101.72.1/wp-content/uploads/2022/10/image-26.png)

2.6 Add master nodes

#On master1, upload the control-plane certificates and generate the certificate key used to add new control-plane nodes

root@k8s-master1:~# kubeadm init phase upload-certs --upload-certs

I1031 10:34:16.110069 9176 version.go:254] remote version is much newer: v1.25.3; falling back to: stable-1.20

[upload-certs] Storing the certificates in Secret "kubeadm-certs" in the "kube-system" Namespace

[upload-certs] Using certificate key:

6896fc25c1bac1d4c2d945077616329e4965f2f76e7f95b7dec4d0af893c5e5d

#Join master2 to the k8s cluster

root@k8s-master2:~# kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047 \

--control-plane --certificate-key 6896fc25c1bac1d4c2d945077616329e4965f2f76e7f95b7dec4d0af893c5e5d

root@k8s-master2:~# mkdir -p $HOME/.kube

root@k8s-master2:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master2:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

#Join master3 to the k8s cluster

root@k8s-master3:~# kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047 \

--control-plane --certificate-key 6896fc25c1bac1d4c2d945077616329e4965f2f76e7f95b7dec4d0af893c5e5d

root@k8s-master3:~# mkdir -p $HOME/.kube

root@k8s-master3:~# sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config

root@k8s-master3:~# sudo chown $(id -u):$(id -g) $HOME/.kube/config

#Check pod and node status on master1

root@k8s-master1:~# kubectl get pod -A

root@k8s-master1:~# kubectl get node -A

![Image [7]](http://39.101.72.1/wp-content/uploads/2022/10/image-27.png)
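The bootstrap token printed by `kubeadm init` is valid for 24 hours by default, and the certificates uploaded by `kubeadm init phase upload-certs` are deleted after two hours, so adding a master later needs fresh values. A sketch of a hypothetical helper that builds a control-plane join command from `kubeadm token create --print-join-command` and a new certificate key:

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn a worker join command into a control-plane join
# command by appending --control-plane and the certificate key.
control_plane_join_cmd() {
  local worker_cmd="$1" cert_key="$2"
  printf '%s --control-plane --certificate-key %s\n' "$worker_cmd" "$cert_key"
}
# Usage on k8s-master1 (as root); upload-certs prints the key on its last line:
#   key=$(kubeadm init phase upload-certs --upload-certs | tail -n1)
#   control_plane_join_cmd "$(kubeadm token create --print-join-command)" "$key"
```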

2.7 Add worker nodes

#Join node1 to the k8s cluster

root@k8s-node1:~# kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047

#Join node2 to the k8s cluster

root@k8s-node2:~# kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047

#Join node3 to the k8s cluster

root@k8s-node3:~# kubeadm join 172.16.1.110:6443 --token urzttq.t4y91pkq3v5dc7ki \

--discovery-token-ca-cert-hash sha256:a8c9a454962544e1a2f09073947adfa942abaa245bc44ecebca31175276ac047

#Check pod and node status on master1

root@k8s-master1:~# kubectl get pod -A -o wide

root@k8s-master1:~# kubectl get node -A -o wide

![Image [8]](http://39.101.72.1/wp-content/uploads/2022/10/image-28.png)
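A newly joined node reports NotReady until its network plugin (here the flannel pod) is running. A sketch of a hypothetical helper that counts Ready nodes from `kubectl get node --no-headers`, next to the built-in `kubectl wait`:

```shell
#!/usr/bin/env bash
# Hypothetical helper: count nodes whose STATUS column is exactly "Ready" in
# `kubectl get node --no-headers` output read from stdin.
count_ready_nodes() {
  awk '$2 == "Ready" { n++ } END { print n + 0 }'
}
# Usage: expect 6 (3 masters + 3 workers)
#   kubectl get node --no-headers | count_ready_nodes
# or block until every node is Ready:
#   kubectl wait --for=condition=Ready node --all --timeout=300s
```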

2.8 Run pods to test the network

#Run pods from the alpine image

root@k8s-master1:~# kubectl run test1 --image=alpine sleep 40000

pod/test1 created

root@k8s-master1:~# kubectl run test2 --image=alpine sleep 40000

pod/test2 created

root@k8s-master1:~# kubectl run test3 --image=alpine sleep 40000

pod/test3 created

root@k8s-master1:~# kubectl run test4 --image=alpine sleep 40000

pod/test4 created

root@k8s-master1:~# kubectl run test5 --image=alpine sleep 40000

pod/test5 created

root@k8s-master1:~# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

test1 1/1 Running 0 41s 10.100.5.2 k8s-node3.kubeadm.com <none> <none>

test2 1/1 Running 0 37s 10.100.3.2 k8s-node1.kubeadm.com <none> <none>

test3 1/1 Running 0 32s 10.100.4.2 k8s-node2.kubeadm.com <none> <none>

test4 1/1 Running 0 29s 10.100.5.3 k8s-node3.kubeadm.com <none> <none>

test5 1/1 Running 0 26s 10.100.4.3 k8s-node2.kubeadm.com <none> <none>

#Open a shell in pod test1 and test the network

root@k8s-master1:~# kubectl exec -it test1 sh

kubectl exec [POD] [COMMAND] is DEPRECATED and will be removed in a future version. Use kubectl exec [POD] -- [COMMAND] instead.

/ # ping 10.100.5.3

/ # ping 10.100.3.2

/ # ping 10.100.4.2

/ # ping www.baidu.com

![Image [9]](http://39.101.72.1/wp-content/uploads/2022/10/image-29.png)
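The manual pings can be looped over every test pod's IP. A sketch with a hypothetical `pod_ips` helper that reads the IP column (the 6th, as in the `kubectl get pod -o wide` output shown above for this kubectl version); pods without an IP yet show `<none>` and are skipped:

```shell
#!/usr/bin/env bash
# Hypothetical helper: print pod IPs from `kubectl get pod -o wide --no-headers`
# output on stdin (assumes the column layout shown above, IP in column 6).
pod_ips() {
  awk '$6 ~ /^[0-9.]+$/ { print $6 }'
}
# Usage (ping -c limits the count so each ping ends on its own):
#   for ip in $(kubectl get pod -o wide --no-headers | pod_ips); do
#     kubectl exec test1 -- ping -c 2 "$ip"
#   done
# Column-independent alternative:
#   kubectl get pod -o jsonpath='{range .items[*]}{.status.podIP}{"\n"}{end}'
```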

© Copyright notice

The copyright of this article belongs to the author. Do not reprint without permission.
