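RBD (RADOS Block Device) is Ceph's block storage interface. RBD talks to the OSDs through the librbd library. It gives virtualization technologies such as KVM, and cloud platforms such as OpenStack and CloudStack, a high-performance and highly scalable storage backend. These systems integrate with RBD through libvirt and QEMU. With librbd, a client can use the RADOS cluster as a block device. A pool intended for RBD, however, must first have the rbd application enabled and then be initialized.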

1. Create the RBD pool

# Command format for creating a pool:

ceph osd pool create <poolname> pg_num pgp_num {replicated|erasure}

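# Create the pool and specify pg_num and pgp_num. pg_num is the number of placement groups in the pool. pgp_num is the number of placement groups used for placement, meaning how PGs are mapped to OSDs. It is normally set equal to pg_num.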

[ceph@deploy ceph-cluster]$ ceph osd pool create myrdb1 64 64

pool 'myrdb1' created
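The article uses 64 PGs for a small test pool. A common rule of thumb from older Ceph documentation targets about (number of OSDs × 100) / replica count PGs in total across all pools, rounded up to a power of two. The sketch below computes that target. The helper name pg_suggest and the example OSD count and replica size are assumptions, not values taken from this cluster.

```shell
#!/bin/sh
# Rule-of-thumb total PG count (older Ceph guidance, sketch only):
#   (OSDs * 100) / replica size, rounded up to the next power of two.
# pg_suggest is a hypothetical helper name.
pg_suggest() {
  local osds="$1" size="$2" target pg=1
  target=$(( (osds * 100 + size - 1) / size ))   # ceiling division
  while [ "$pg" -lt "$target" ]; do
    pg=$(( pg * 2 ))                             # next power of two
  done
  echo "$pg"
}

# Hypothetical cluster: 9 OSDs, 3 replicas -> target 300 -> 512
pg_suggest 9 3
```

The result is a budget shared by every pool in the cluster, so a single test pool like myrdb1 can reasonably get a smaller share such as 64.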

# Enable the RBD application on the pool

[ceph@deploy ceph-cluster]$ ceph osd pool application enable myrdb1 rdb

enabled application 'rdb' on pool 'myrdb1'

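# Initialize the pool with the rbd command

[ceph@deploy ceph-cluster]$ rbd pool init -p myrdb1

rbd: pool already registered to a different application.

Note: the previous step causes this error. The application name was typed as "rdb" instead of "rbd", so the pool was tagged with the wrong application and rbd pool init refused to run. The correct command is ceph osd pool application enable myrdb1 rbd. rbd pool init also tags the pool with the rbd application by itself. If a pool already carries the wrong tag, remove it with ceph osd pool application disable myrdb1 rdb --yes-i-really-mean-it and then run rbd pool init again.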
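Here is a sketch of the same pool setup with the application name spelled "rbd", plus a recovery path for a pool already tagged "rdb". The helpers run, setup_rbd_pool and fix_wrong_app are hypothetical. With DRY_RUN=1, the default here, the script only prints the commands. Set DRY_RUN=0 on a node with admin credentials to execute them.

```shell
#!/bin/sh
# Dry-run wrapper: print commands when DRY_RUN=1, execute them otherwise.
DRY_RUN=${DRY_RUN:-1}
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
}

# Create a replicated pool and prepare it for RBD (hypothetical helper).
setup_rbd_pool() {
  local pool="$1" pg="$2"
  run ceph osd pool create "$pool" "$pg" "$pg"
  run ceph osd pool application enable "$pool" rbd   # "rbd", not "rdb"
  run rbd pool init -p "$pool"
}

# Recovery for a pool already tagged with the mistyped "rdb" application.
fix_wrong_app() {
  local pool="$1"
  run ceph osd pool application disable "$pool" rdb --yes-i-really-mean-it
  run rbd pool init -p "$pool"
}

setup_rbd_pool myrdb1 64
```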

2. Create and verify images

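An rbd pool cannot be used as a block device directly. You first create images in it as needed, then use each image as a block device. The rbd command creates, lists and deletes the images in a pool. It also handles management tasks such as cloning images, creating snapshots, rolling an image back to a snapshot and listing snapshots.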

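# myimg1 keeps the default feature set, while myimg2 enables only layering. The client in the later steps runs CentOS, whose older kernel cannot map images that use the other features; those need a newer kernel.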

[ceph@deploy ceph-cluster]$ rbd create myimg1 --size 5G --pool myrdb1

[ceph@deploy ceph-cluster]$ rbd create myimg2 --size 1G --pool myrdb1 --image-format 2 --image-feature layering
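An image's feature set can also be expressed as a bitmask, for example through the rbd_default_features option in ceph.conf. The sketch below decodes such a mask. It assumes the standard librbd bit values: layering=1, striping=2, exclusive-lock=4, object-map=8, fast-diff=16, deep-flatten=32, journaling=64. The function name is hypothetical. Mask 61 is the default set that rbd info reports for myimg1. Mask 1 is layering only, as used for myimg2.

```shell
#!/bin/sh
# Decode an RBD feature bitmask into comma-separated feature names.
# Bit order assumed to follow librbd: bit 0 = layering, bit 1 = striping, ...
decode_rbd_features() {
  local mask="$1" bit=1 out="" name
  for name in layering striping exclusive-lock object-map fast-diff deep-flatten journaling; do
    if [ $(( mask & bit )) -ne 0 ]; then
      out="${out:+$out, }$name"   # append with ", " separator
    fi
    bit=$(( bit * 2 ))
  done
  echo "$out"
}

decode_rbd_features 61   # layering, exclusive-lock, object-map, fast-diff, deep-flatten
decode_rbd_features 1    # layering
```

Setting rbd_default_features = 1 on the side that creates images makes new images layering-only by default. This avoids passing --image-feature on every rbd create when the clients run old kernels.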

# List all images in the specified pool

[ceph@deploy ceph-cluster]$ rbd ls --pool myrdb1

myimg1

myimg2

# Show information about a specific image

[ceph@deploy ceph-cluster]$ rbd --image myimg1 --pool myrdb1 info

rbd image 'myimg1':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

id: 11016b8b4567

block_name_prefix: rbd_data.11016b8b4567

format: 2

features: layering, exclusive-lock, object-map, fast-diff, deep-flatten

op_features:

flags:

create_timestamp: Fri Jan 6 14:44:35 2023

[ceph@deploy ceph-cluster]$ rbd --image myimg2 --pool myrdb1 info

rbd image 'myimg2':

size 1 GiB in 256 objects

order 22 (4 MiB objects)

id: 11076b8b4567

block_name_prefix: rbd_data.11076b8b4567

format: 2

features: layering

op_features:

flags:

create_timestamp: Fri Jan 6 14:45:56 2023
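The "order 22" line means the image is striped over RADOS objects of 2^22 bytes (4 MiB), and that is where the object counts in the output come from. Here is the arithmetic as a small shell sketch. The helper name rbd_object_count is hypothetical.

```shell
#!/bin/sh
# Number of backing objects for an image of size_bytes with the given order
# (object size = 2^order bytes), rounded up.
rbd_object_count() {
  local size_bytes="$1" order="$2" obj
  obj=$(( 1 << order ))
  echo $(( (size_bytes + obj - 1) / obj ))
}

GiB=$(( 1024 * 1024 * 1024 ))
echo "object size: $(( 1 << 22 )) bytes"                       # 4194304
echo "myimg1: $(rbd_object_count $(( 5 * GiB )) 22) objects"   # 1280
echo "myimg2: $(rbd_object_count $(( 1 * GiB )) 22) objects"   # 256
```

These counts are upper bounds. RBD images are thin-provisioned, so objects are only created as data is written. The same 4194304-byte value shows up as the I/O size in the fdisk output in section 3.4.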

3. Using the block storage from a client

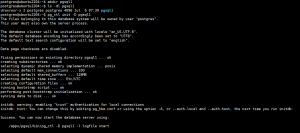

3.1 Current Ceph status

[ceph@deploy ceph-cluster]$ ceph df

GLOBAL:

SIZE AVAIL RAW USED %RAW USED

1.5 TiB 1.4 TiB 16 GiB 1.07

POOLS:

NAME ID USED %USED MAX AVAIL OBJECTS

mypool 1 0 B 0 469 GiB 0

myrdb1 2 0 B 0 469 GiB 0

3.2 Install ceph-common on the client

[root@client ~]# yum install https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/noarch/ceph-release-1-1.el7.noarch.rpm

[root@client ~]# yum install epel-release

[root@client ~]# yum install -y ceph-common

# Copy the cluster config and the admin keyring from the deploy node to the client

[ceph@deploy ceph-cluster]$ scp ceph.conf ceph.client.admin.keyring root@192.168.1.11:/etc/ceph/
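By default, rbd on the client reads /etc/ceph/ceph.conf and, for the client.admin user, the keyring /etc/ceph/ceph.client.admin.keyring. That is why both files are copied above. Below is a hypothetical pre-flight check to run before mapping. The function name is an assumption, and it only tests that the files exist and are readable.

```shell
#!/bin/sh
# Hypothetical check: confirm the config and admin keyring copied from the
# deploy node are readable in the given directory (default /etc/ceph).
check_ceph_client_files() {
  local dir="${1:-/etc/ceph}" missing=0 f
  for f in ceph.conf ceph.client.admin.keyring; do
    if [ ! -r "$dir/$f" ]; then
      echo "missing: $dir/$f" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

Usage on the client: check_ceph_client_files && rbd -p myrdb1 map myimg2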

3.3 Map the image on the client

[root@client ceph]# rbd -p myrdb1 map myimg1

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable myrdb1/myimg1 object-map fast-diff deep-flatten".

In some cases useful info is found in syslog - try "dmesg | tail".

rbd: map failed: (6) No such device or address

[root@client ceph]# rbd -p myrdb1 map myimg2

/dev/rbd0
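myimg1 fails to map because its feature set includes features the client kernel lacks, while myimg2 (layering only) maps fine. The sketch below compares an image's features with the ones the kernel supports and prints the matching rbd feature disable command. The supported list "layering exclusive-lock" is an assumption inferred from the error message above, which names only object-map, fast-diff and deep-flatten. The function name is hypothetical.

```shell
#!/bin/sh
# Print the "rbd feature disable" command for every feature of an image that
# is not in the kernel-supported list. Prints nothing if all are supported.
features_to_disable() {
  local image="$1" supported="$2" features="$3" f todo=""
  for f in $features; do                 # word-split the feature list
    case " $supported " in
      *" $f "*) ;;                       # supported by the kernel: keep it
      *) todo="${todo:+$todo }$f" ;;     # unsupported: schedule for disabling
    esac
  done
  if [ -n "$todo" ]; then
    echo "rbd feature disable $image $todo"
  fi
}

features_to_disable myrdb1/myimg1 \
  "layering exclusive-lock" \
  "layering exclusive-lock object-map fast-diff deep-flatten"
# rbd feature disable myrdb1/myimg1 object-map fast-diff deep-flatten
```

After running the printed command, rbd -p myrdb1 map myimg1 should succeed, assuming the kernel really supports the remaining features.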

3.4 Verify the RBD device on the client

[root@client ceph]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sr0 11:0 1 4.4G 0 rom

rbd0 252:0 0 1G 0 disk

[root@client ceph]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 1073 MB, 1073741824 bytes, 2097152 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

3.5 Format and mount the disk on the client

[root@client ceph]# mkfs.xfs /dev/rbd0

Discarding blocks...Done.

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=32768 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=262144, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@client ceph]# mkdir /data

[root@client ceph]# mount /dev/rbd0 /data

[root@client ceph]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 19G 2.4G 16G 13% /

/dev/sda1 xfs 1.1G 158M 906M 15% /boot

tmpfs tmpfs 396M 0 396M 0% /run/user/0

/dev/rbd0 xfs 1.1G 34M 1.1G 4% /data

[root@client ceph]# cp /etc/passwd /data/

[root@client ceph]# ll /data/

total 4

-rw-r--r-- 1 root root 1123 Jan 6 15:18 passwd
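The client-side steps from sections 3.3 to 3.5 can be condensed into one dry-run sketch. The helpers map_and_mount and run are hypothetical. With DRY_RUN=1, the device name is a placeholder (/dev/rbd0, as in this article). With DRY_RUN=0, it comes from the path that rbd map prints. mkfs.xfs wipes the device, so point this only at a new image.

```shell
#!/bin/sh
# Dry-run wrapper: print commands when DRY_RUN=1, execute them otherwise.
DRY_RUN=${DRY_RUN:-1}
run() {
  if [ "$DRY_RUN" = 1 ]; then echo "$*"; else "$@"; fi
}

# Map an image, create an XFS filesystem on it and mount it (hypothetical helper).
map_and_mount() {
  local pool="$1" image="$2" mnt="$3" dev
  if [ "$DRY_RUN" = 1 ]; then
    dev=/dev/rbd0                                   # placeholder device name
    run rbd -p "$pool" map "$image"
  else
    dev=$(rbd -p "$pool" map "$image") || return 1  # rbd map prints the device path
  fi
  run mkfs.xfs "$dev"
  run mkdir -p "$mnt"
  run mount "$dev" "$mnt"
}

map_and_mount myrdb1 myimg2 /data
```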

3.6 Write test on the client

[root@client ceph]# dd if=/dev/zero of=/data/ceph-test-file bs=1MB count=300

300+0 records in

300+0 records out

300000000 bytes (300 MB) copied, 0.742299 s, 404 MB/s

[root@client ceph]# ll -h /data/ceph-test-file

-rw-r--r-- 1 root root 287M Jan 6 15:19 /data/ceph-test-file
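The file shows as 287M rather than 300M because of units. dd's bs=1MB means 10^6 bytes, while ls -h reports sizes in powers of 1024 and rounds up to the next whole unit. The arithmetic:

```shell
#!/bin/sh
# 300 blocks of 1MB (10^6 bytes each), shown by ls -h in MiB, rounded up.
bytes=$(( 300 * 1000 * 1000 ))
mib=$(( 1024 * 1024 ))
echo "bytes written: $bytes"                           # 300000000
echo "ls -h shows:   $(( (bytes + mib - 1) / mib ))M"   # 287M
```

The 404 MB/s figure was measured without conv=fdatasync or oflag=direct, so it probably reflects writes into the client's page cache rather than the speed of the RBD device.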

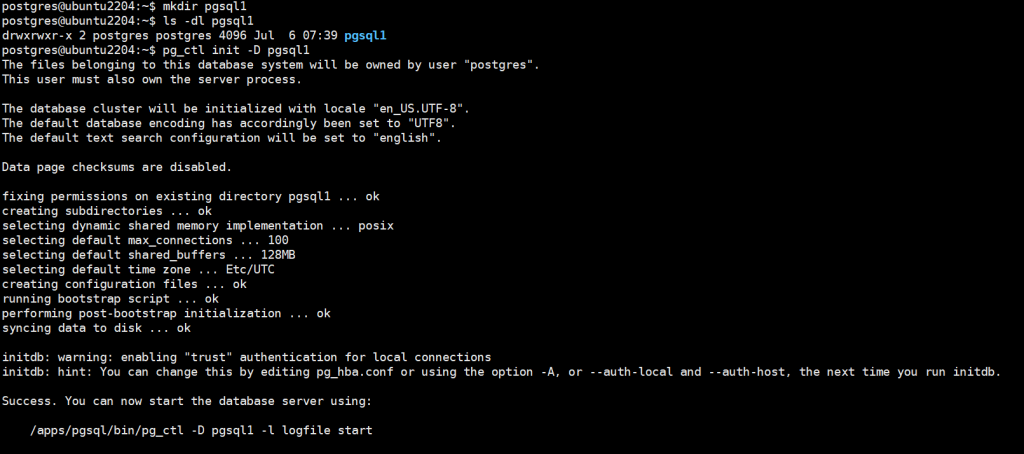

3.7 Verify the data on the Ceph side

[ceph@deploy ceph-cluster]$ ceph df

GLOBAL:

SIZE AVAIL RAW USED %RAW USED

1.5 TiB 1.4 TiB 16 GiB 1.07

POOLS:

NAME ID USED %USED MAX AVAIL OBJECTS

mypool 1 0 B 0 469 GiB 0

myrdb1 2 300 MiB 0.06 469 GiB 91
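ceph df now reports 91 objects for myrdb1. A quick lower bound shows that the 300000000 bytes written by dd alone need at least 72 objects of 4 MiB. The remaining objects presumably hold the XFS metadata written by mkfs and mount, the copied passwd file, and the image's own header objects. That breakdown is an inference; the output above does not show it.

```shell
#!/bin/sh
# Minimum number of 4 MiB objects needed to store the dd test file.
obj=$(( 4 * 1024 * 1024 ))       # object size for order 22
bytes=$(( 300 * 1000 * 1000 ))   # bytes written by dd (300 x 1MB)
echo "minimum data objects: $(( (bytes + obj - 1) / obj ))"   # 72
```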
