1. RBD architecture

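Ceph provides object storage (RADOSGW), block storage (RBD) and file system storage (CephFS) at the same time. RBD is short for RADOS Block Device, and RBD block storage is generally regarded as the most mature and most widely used of the three. An RBD block device can be mapped and mounted like a disk, supports snapshots, multiple replicas, cloning and consistency, and its data is striped across multiple OSDs in the Ceph cluster.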

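Striping is a technique that automatically balances I/O load across multiple physical disks: a contiguous piece of data is split into many small chunks, and the chunks are stored on different disks. Multiple processes can then access different parts of the data at the same time without contending for the same disk, and sequential access gets the maximum possible I/O parallelism, which gives very good performance.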
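To make the chunk placement concrete, here is a minimal sketch that computes which RADOS object of an image holds a given byte offset and where inside that object it lands. It assumes Ceph's striping layout as I understand it, driven by the `--stripe-unit`, `--stripe-count` and `--object-size` options that appear in the `rbd help create` output later in this post; the function name `rbd_offset_to_object` is hypothetical and not part of any Ceph tool.

```shell
#!/usr/bin/env bash
# Sketch: map a byte offset in an RBD image to (object number, offset in object),
# assuming Ceph's striping layout: stripe units are written round-robin across
# STRIPE_COUNT objects; when those objects are full, the next object set starts.
# With the defaults (stripe unit == object size, stripe count 1) this reduces to
# offset / object_size.
# usage: rbd_offset_to_object OFFSET STRIPE_UNIT STRIPE_COUNT OBJECT_SIZE
rbd_offset_to_object() {
  local off=$1 su=$2 sc=$3 os=$4
  local stripes_per_object=$(( os / su ))         # stripe units per object
  local blockno=$(( off / su ))                    # global stripe-unit index
  local stripeno=$(( blockno / sc ))               # stripe (row across sc objects)
  local stripepos=$(( blockno % sc ))              # column: object within the set
  local objectsetno=$(( stripeno / stripes_per_object ))
  local objectno=$(( objectsetno * sc + stripepos ))
  local obj_off=$(( (stripeno % stripes_per_object) * su + off % su ))
  echo "$objectno $obj_off"
}
```

With the default layout, `rbd_offset_to_object $((10 * 1024 * 1024)) 4194304 1 4194304` prints `2 2097152`: offset 10 MiB sits 2 MiB into object 2. With a 64 KiB stripe unit over 4 objects, consecutive 64 KiB chunks rotate over objects 0 to 3, so one sequential write touches several objects (and therefore, via CRUSH, usually several OSDs) in parallel.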

![Image 1 - Ceph RBD usage - Li Jiacheng's personal homepage](http://www.lijiach.com/wp-content/uploads/2023/01/image-171.png)

2. Create a storage pool

# Create the pool

[ceph@deploy ceph-cluster]$ ceph osd pool create rbd-data1 32 32

pool 'rbd-data1' created

# Verify the pool

[ceph@deploy ceph-cluster]$ ceph osd pool ls

mypool

myrdb2

.rgw.root

default.rgw.control

default.rgw.meta

default.rgw.log

cephfs-metadata

cephfs-data

rbd-data1

# Enable the rbd application on the pool

[ceph@deploy ceph-cluster]$ ceph osd pool application enable rbd-data1 rbd

enabled application 'rbd' on pool 'rbd-data1'

# Initialize the pool for RBD

[ceph@deploy ceph-cluster]$ rbd pool init -p rbd-data1
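The three steps above (create the pool, tag it with the rbd application, initialize it) always go together when preparing a pool for RBD. A minimal sketch that wraps them in one function, assuming it runs on a node with admin credentials such as the deploy node; `setup_rbd_pool` and the `DRY_RUN` switch are hypothetical helpers, and the placement-group count defaults to the 32 used above.

```shell
#!/usr/bin/env bash
# Print a command, and run it unless DRY_RUN is set (hypothetical helper).
run() {
  echo "+ $*"
  if [ -z "${DRY_RUN:-}" ]; then "$@"; fi
}

# usage: setup_rbd_pool POOL [PG_NUM]
setup_rbd_pool() {
  local pool=$1 pg=${2:-32}
  run ceph osd pool create "$pool" "$pg" "$pg"       # keep pg_num == pgp_num
  run ceph osd pool application enable "$pool" rbd   # tag the pool for RBD
  run rbd pool init -p "$pool"                       # initialize RBD metadata
}
```

`DRY_RUN=1 setup_rbd_pool rbd-data1` only prints the three commands, which is a cheap way to review them before touching the cluster. Keeping pg_num and pgp_num equal avoids the "pg_num > pgp_num" health warning that appears in the `ceph -s` output further down.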

3. Create images

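An RBD pool cannot be used as a block device directly. You first create images in it as needed, and then use an image as the block device. The rbd command creates, lists and deletes block device images, and also handles management tasks such as cloning images, creating snapshots, rolling an image back to a snapshot and listing snapshots.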

For example, the commands below create two images, data-img1 and data-img2, in the RBD pool rbd-data1.

Command syntax:

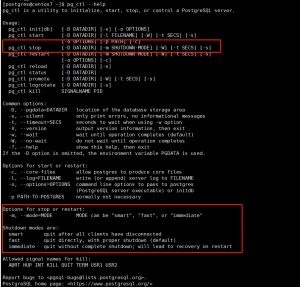

[ceph@deploy ceph-cluster]$ rbd help create

usage: rbd create [--pool <pool>] [--image <image>]

[--image-format <image-format>] [--new-format]

[--order <order>] [--object-size <object-size>]

[--image-feature <image-feature>] [--image-shared]

[--stripe-unit <stripe-unit>]

[--stripe-count <stripe-count>] [--data-pool <data-pool>]

[--journal-splay-width <journal-splay-width>]

[--journal-object-size <journal-object-size>]

[--journal-pool <journal-pool>]

[--thick-provision] --size <size> [--no-progress]

<image-spec>

Create an empty image.

Positional arguments

<image-spec> image specification

(example: [<pool-name>/]<image-name>)

Optional arguments

-p [ --pool ] arg pool name

--image arg image name

--image-format arg image format [1 (deprecated) or 2]

--new-format use image format 2

(deprecated)

--order arg object order [12 <= order <= 25]

--object-size arg object size in B/K/M [4K <= object size <= 32M]

--image-feature arg image features

[layering(+), exclusive-lock(+*), object-map(+*),

fast-diff(+*), deep-flatten(+-), journaling(*)]

--image-shared shared image

--stripe-unit arg stripe unit in B/K/M

--stripe-count arg stripe count

--data-pool arg data pool

--journal-splay-width arg number of active journal objects

--journal-object-size arg size of journal objects [4K <= size <= 64M]

--journal-pool arg pool for journal objects

--thick-provision fully allocate storage and zero image

-s [ --size ] arg image size (in M/G/T) [default: M]

--no-progress disable progress output

Image Features:

(*) supports enabling/disabling on existing images

(-) supports disabling-only on existing images

(+) enabled by default for new images if features not specified

Create the images:

# Create two images

[ceph@deploy ceph-cluster]$ rbd create data-img1 --size 3G --pool rbd-data1 --image-format 2 --image-feature layering

[ceph@deploy ceph-cluster]$ rbd create data-img2 --size 5G --pool rbd-data1 --image-format 2 --image-feature layering

# Verify the images

[ceph@deploy ceph-cluster]$ rbd ls --pool rbd-data1

data-img1

data-img2

# List the images with details

[ceph@deploy ceph-cluster]$ rbd ls --pool rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2

data-img2 5 GiB 2

Show detailed image information:

[ceph@deploy ceph-cluster]$ rbd --image data-img2 --pool rbd-data1 info

rbd image 'data-img2':

size 5 GiB in 1280 objects

order 22 (4 MiB objects)

id: 15d56b8b4567

block_name_prefix: rbd_data.15d56b8b4567

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Jan 9 14:16:23 2023

[ceph@deploy ceph-cluster]$ rbd --image data-img1 --pool rbd-data1 info

rbd image 'data-img1':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

id: 15cf6b8b4567

block_name_prefix: rbd_data.15cf6b8b4567

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Jan 9 14:16:08 2023
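The numbers in these two outputs are related: order 22 means objects of 2^22 bytes (4 MiB), so a 3 GiB image spans 768 objects and a 5 GiB image 1280. A small sketch of that arithmetic; `rbd_object_count` is a hypothetical helper. The result is an upper bound: RBD images are thin-provisioned and an object is only created when its range is first written, which is why `ceph df` later reports just 59 objects in rbd-data1.

```shell
#!/usr/bin/env bash
# Sketch: reproduce "size ... in N objects" from `rbd info`.
# Object size is 2^ORDER bytes; the image needs ceil(SIZE / object size) objects.
# usage: rbd_object_count SIZE_BYTES [ORDER]
rbd_object_count() {
  local size=$1 order=${2:-22}          # order 22 (4 MiB objects) is the default
  local obj=$(( 1 << order ))           # object size in bytes
  echo $(( (size + obj - 1) / obj ))    # round up for partial last objects
}
```

For instance, `rbd_object_count 5368709120` prints 1280, matching the data-img2 output above.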

Show image information in JSON format:

[ceph@deploy ceph-cluster]$ rbd ls --pool rbd-data1 -l --format json --pretty-format

[

{

"image": "data-img1",

"size": 3221225472,

"format": 2

},

{

"image": "data-img2",

"size": 5368709120,

"format": 2

}

]

Other image features:

[ceph@deploy ceph-cluster]$ rbd help feature enable

usage: rbd feature enable [--pool <pool>] [--image <image>]

[--journal-splay-width <journal-splay-width>]

[--journal-object-size <journal-object-size>]

[--journal-pool <journal-pool>]

<image-spec> <features> [<features> ...]

Enable the specified image feature.

Positional arguments

<image-spec> image specification

(example: [<pool-name>/]<image-name>)

<features> image features

[exclusive-lock, object-map, fast-diff, journaling]

Optional arguments

-p [ --pool ] arg pool name

--image arg image name

--journal-splay-width arg number of active journal objects

--journal-object-size arg size of journal objects [4K <= size <= 64M]

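--journal-pool arg pool for journal objects

layering: supports layered snapshots, used for snapshots and copy-on-write. You can snapshot an image, protect the snapshot, and then clone new images from it; parent and child images share object data through COW.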

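striping: supports striping v2, similar to RAID 0 except that in Ceph the data is spread across different objects; this can improve performance for workloads dominated by sequential reads and writes.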

exclusive-lock: supports an exclusive lock, so that only one client at a time holds the lock and can modify the image.

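object-map: supports an object map (requires exclusive-lock), which speeds up import/export and used-space accounting. When enabled, a bitmap of all objects in the image is kept to record whether each object actually exists, which can speed up I/O in some scenarios.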

fast-diff: quickly computes the differences between an image and its snapshots (requires object-map).

deep-flatten: supports flattening, used in snapshot management to remove the dependency of a clone (including its snapshots) on its parent.

journaling: records every modification in a journal so that data can be recovered by replaying it (requires exclusive-lock); enabling this feature increases disk I/O.

In Jewel, the features enabled by default are layering, exclusive-lock, object-map, fast-diff and deep-flatten.

Enabling image features

# Verify the image features

[ceph@deploy ceph-cluster]$ rbd --image data-img1 --pool rbd-data1 info

rbd image 'data-img1':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

id: 15cf6b8b4567

block_name_prefix: rbd_data.15cf6b8b4567

format: 2

features: layering

op_features:

flags:

create_timestamp: Mon Jan 9 14:16:08 2023

# Enable features on a specific image in a specific pool

[ceph@deploy ceph-cluster]$ rbd feature enable exclusive-lock --pool rbd-data1 --image data-img1

[ceph@deploy ceph-cluster]$ rbd feature enable object-map --pool rbd-data1 --image data-img1

[ceph@deploy ceph-cluster]$ rbd feature enable fast-diff --pool rbd-data1 --image data-img1
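The three commands above enable the features in dependency order (exclusive-lock, then object-map, then fast-diff), matching the prerequisites in the feature list. The sketch below derives that order automatically, assuming only the dependencies stated in that list; `feature_enable_order` and `enable_features` are hypothetical helper names, and `DRY_RUN=1` prints the commands instead of running them. It also runs `rbd object-map rebuild`, because an object map enabled on an existing image starts out flagged invalid (see the flags line in the next output) until it is rebuilt. The sketch does not skip features that are already enabled, so only request features the image lacks.

```shell
#!/usr/bin/env bash
# Print a command, and run it unless DRY_RUN is set (hypothetical helper).
run() {
  echo "+ $*"
  if [ -z "${DRY_RUN:-}" ]; then "$@"; fi
}

# Direct prerequisite of a feature, per the feature list above.
feature_prereq() {
  case $1 in
    object-map|journaling) echo exclusive-lock ;;
    fast-diff)             echo object-map ;;
  esac
}

# usage: feature_enable_order FEATURE...
# Prints the features plus their prerequisites, prerequisites first, each once.
feature_enable_order() {
  local out="" f
  _add_feature() {
    local p
    p=$(feature_prereq "$1")
    if [ -n "$p" ]; then _add_feature "$p"; fi   # depth-first: deps first
    case " $out " in
      *" $1 "*) ;;                               # already listed
      *) out="${out:+$out }$1" ;;
    esac
  }
  for f in "$@"; do _add_feature "$f"; done
  echo "$out"
}

# usage: enable_features POOL IMAGE FEATURE...
enable_features() {
  local pool=$1 image=$2 order f
  shift 2
  order=$(feature_enable_order "$@")
  for f in $order; do
    run rbd feature enable "$f" --pool "$pool" --image "$image"
  done
  # A new object map on an existing image is marked invalid; rebuild it.
  case " $order " in
    *" object-map "*) run rbd object-map rebuild "$pool/$image" ;;
  esac
}
```

`DRY_RUN=1 enable_features rbd-data1 data-img1 fast-diff` prints the same three enable commands used above, followed by the rebuild.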

# Verify the image features again

[ceph@deploy ceph-cluster]$ rbd --image data-img1 --pool rbd-data1 info

rbd image 'data-img1':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

id: 15cf6b8b4567

block_name_prefix: rbd_data.15cf6b8b4567

format: 2

features: layering, exclusive-lock, object-map, fast-diff

op_features:

flags: object map invalid, fast diff invalid

create_timestamp: Mon Jan 9 14:16:08 2023

Disabling image features

# Disable a feature on a specific image in a specific pool

[ceph@deploy ceph-cluster]$ rbd feature disable fast-diff --pool rbd-data1 --image data-img1

# Verify the image features

[ceph@deploy ceph-cluster]$ rbd --image data-img1 --pool rbd-data1 info

rbd image 'data-img1':

size 3 GiB in 768 objects

order 22 (4 MiB objects)

id: 15cf6b8b4567

block_name_prefix: rbd_data.15cf6b8b4567

format: 2

features: layering, exclusive-lock, object-map

op_features:

flags: object map invalid

create_timestamp: Mon Jan 9 14:16:08 2023

4. Configure a client to use RBD

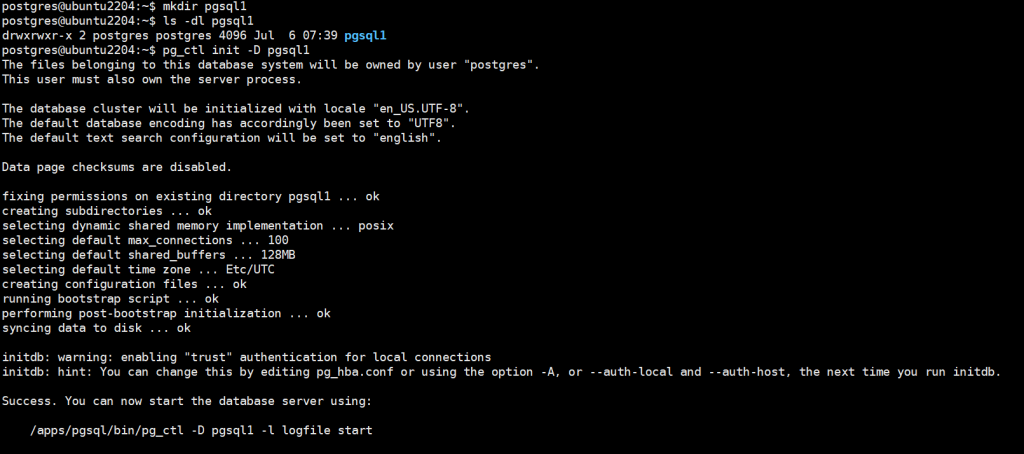

Map RBD images on CentOS clients, once with the admin account and once with a regular account, then mount them and verify that they work.

Configure the yum repositories on the client:

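To map and use Ceph RBD, the client needs the Ceph client package ceph-common. ceph-common is not in the CentOS yum repositories, so a separate repository has to be configured (here the Mimic repository from the Tsinghua mirror, plus EPEL).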

[root@ceph-client ~]# yum install -y https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/noarch/ceph-release-1-1.el7.noarch.rpm

[root@ceph-client ~]# yum install -y epel-release

Install ceph-common on the client:

[root@ceph-client ~]# yum install -y ceph-common

4.1 Map and use RBD on the client with the admin account

# Copy the cluster config and the admin keyring to the client

[ceph@deploy ceph-cluster]$ scp ceph.conf ceph.client.admin.keyring root@192.168.1.11:/etc/ceph/

# Map the image on the client

[root@ceph-client ~]# rbd -p rbd-data1 map data-img1

rbd: sysfs write failed

RBD image feature set mismatch. You can disable features unsupported by the kernel with "rbd feature disable rbd-data1/data-img1 object-map".

In some cases useful info is found in syslog - try "dmesg | tail".

rbd: map failed: (6) No such device or address

# The kernel client does not support some of the image's features (here: object-map); disable them on the Ceph admin node
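The kernel RBD client (krbd) supports fewer image features than librbd, and the supported set depends on the kernel version. The sketch below computes which enabled features to turn off before mapping, dependents first, so that object-map is never disabled while fast-diff still depends on it. The supported list `layering exclusive-lock` is an assumption taken from this CentOS 7 client, where mapping succeeded once object-map was disabled; `features_to_disable` and `KRBD_SUPPORTED` are hypothetical names.

```shell
#!/usr/bin/env bash
# Features the client kernel can map. Assumption: matches this CentOS 7
# client; override KRBD_SUPPORTED for other kernels.
KRBD_SUPPORTED=${KRBD_SUPPORTED:-"layering exclusive-lock"}

# usage: features_to_disable "layering, exclusive-lock, object-map"
# (the value of the "features:" line from `rbd info`)
# Prints the enabled features the kernel cannot handle, in a safe disable order.
features_to_disable() {
  local current=" ${1//,/ } " drop=" " out="" f
  for f in $current; do
    case " $KRBD_SUPPORTED " in
      *" $f "*) ;;                       # supported, keep it
      *) drop="$drop$f " ;;
    esac
  done
  # A feature cannot stay enabled once its prerequisite is disabled.
  case $drop in *" exclusive-lock "*) drop="${drop}object-map fast-diff journaling " ;; esac
  case $drop in *" object-map "*) drop="${drop}fast-diff " ;; esac
  # Dependents first; layering is left out because it cannot be disabled.
  for f in journaling fast-diff object-map deep-flatten exclusive-lock; do
    case $current in *" $f "*) ;; *) continue ;; esac   # only enabled features
    case $drop in *" $f "*) out="${out:+$out }$f" ;; esac
  done
  echo "$out"
}
```

The output can be fed to the command suggested in the error message, for example `for f in $(features_to_disable "layering, exclusive-lock, object-map"); do rbd feature disable rbd-data1/data-img1 "$f"; done`.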

# On the admin node, disable the object-map feature of data-img1

[ceph@deploy ceph-cluster]$ rbd feature disable object-map --pool rbd-data1 --image data-img1

[root@ceph-client ~]# rbd -p rbd-data1 map data-img1

/dev/rbd0

[root@ceph-client ~]# rbd -p rbd-data1 map data-img2

/dev/rbd1

Verify the devices on the client:

[root@ceph-client ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk

├─sda1 8:1 0 1G 0 part /boot

└─sda2 8:2 0 19G 0 part

├─centos-root 253:0 0 17G 0 lvm /

└─centos-swap 253:1 0 2G 0 lvm [SWAP]

sr0 11:0 1 4.4G 0 rom

rbd0 252:0 0 3G 0 disk

rbd1 252:16 0 5G 0 disk

Format the devices and mount them on the client:

[root@ceph-client ~]# mkfs.xfs /dev/rbd0

Discarding blocks...Done.

meta-data=/dev/rbd0 isize=512 agcount=8, agsize=98304 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=786432, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@ceph-client ~]# mkfs.xfs /dev/rbd1

Discarding blocks...Done.

meta-data=/dev/rbd1 isize=512 agcount=8, agsize=163840 blks

= sectsz=512 attr=2, projid32bit=1

= crc=1 finobt=0, sparse=0

data = bsize=4096 blocks=1310720, imaxpct=25

= sunit=1024 swidth=1024 blks

naming =version 2 bsize=4096 ascii-ci=0 ftype=1

log =internal log bsize=4096 blocks=2560, version=2

= sectsz=512 sunit=8 blks, lazy-count=1

realtime =none extsz=4096 blocks=0, rtextents=0

[root@ceph-client ~]# mkdir /data0

[root@ceph-client ~]# mkdir /data1

[root@ceph-client ~]# mount /dev/rbd0 /data0

[root@ceph-client ~]# mount /dev/rbd1 /data1

[root@ceph-client ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 19G 2.4G 16G 13% /

/dev/sda1 xfs 1.1G 158M 906M 15% /boot

tmpfs tmpfs 396M 0 396M 0% /run/user/0

/dev/rbd0 xfs 3.3G 34M 3.2G 2% /data0

/dev/rbd1 xfs 5.4G 34M 5.4G 1% /data1

Verify that the client can write data:

Install Docker and start a MySQL container to verify that the container can write its data to the RBD-backed mount point /data0.

# Install Docker (mirror instructions: https://mirrors.tuna.tsinghua.edu.cn/help/docker-ce/)

[root@ceph-client ~]# yum install -y yum-utils device-mapper-persistent-data lvm2

[root@ceph-client ~]# yum-config-manager --add-repo https://download.docker.com/linux/centos/docker-ce.repo

[root@ceph-client ~]# sudo sed -i 's+download.docker.com+mirrors.tuna.tsinghua.edu.cn/docker-ce+' /etc/yum.repos.d/docker-ce.repo

[root@ceph-client ~]# yum install -y docker-ce

[root@ceph-client ~]# systemctl enable --now docker.service

[root@ceph-client ~]# docker run -it -d -p 3306:3306 -e MYSQL_ROOT_PASSWORD=123456 -v /data0:/var/lib/mysql mysql:5.6.46

52fbc073bc5ff0a27dd255e224ed26499e54e0f9691cb0f906cb83a2eee64603

[root@ceph-client ~]# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

52fbc073bc5f mysql:5.6.46 "docker-entrypoint.s…" 7 seconds ago Up 7 seconds 0.0.0.0:3306->3306/tcp, :::3306->3306/tcp xenodochial_gould

# Verify the data

[root@ceph-client ~]# ll /data0

total 110604

-rw-rw---- 1 polkitd input 56 Jan 9 14:54 auto.cnf

-rw-rw---- 1 polkitd input 12582912 Jan 9 14:54 ibdata1

-rw-rw---- 1 polkitd input 50331648 Jan 9 14:54 ib_logfile0

-rw-rw---- 1 polkitd input 50331648 Jan 9 14:54 ib_logfile1

drwx------ 2 polkitd input 4096 Jan 9 14:54 mysql

drwx------ 2 polkitd input 4096 Jan 9 14:54 performance_schema

Check pool usage:

[ceph@deploy ceph-cluster]$ ceph df

GLOBAL:

SIZE AVAIL RAW USED %RAW USED

1.5 TiB 1.4 TiB 17 GiB 1.10

POOLS:

NAME ID USED %USED MAX AVAIL OBJECTS

mypool 1 962 B 0 469 GiB 2

myrdb2 2 300 MiB 0.06 469 GiB 91

.rgw.root 3 1.1 KiB 0 469 GiB 4

default.rgw.control 4 0 B 0 469 GiB 8

default.rgw.meta 5 0 B 0 469 GiB 0

default.rgw.log 6 0 B 0 469 GiB 175

cephfs-metadata 7 7.5 KiB 0 469 GiB 22

cephfs-data 8 1004 KiB 0 469 GiB 1

rbd-data1 10 152 MiB 0.03 469 GiB 59

4.2 Map and use RBD on the client with a regular account

Create a regular account and grant it permissions:

[ceph@deploy ceph-cluster]$ ceph auth add client.user1 mon 'allow r' osd 'allow rwx pool=rbd-data1'

added key for client.user1

[ceph@deploy ceph-cluster]$ ceph auth get client.user1

exported keyring for client.user1

[client.user1]

key = AQAru7tjKbEQNRAAwmb+0hnxray9Os+pfgX0JQ==

caps mon = "allow r"

caps osd = "allow rwx pool=rbd-data1"

[ceph@deploy ceph-cluster]$ ceph-authtool --create-keyring ceph.client.user1.keyring

creating ceph.client.user1.keyring

[ceph@deploy ceph-cluster]$ ceph auth get client.user1 -o ceph.client.user1.keyring

exported keyring for client.user1

[ceph@deploy ceph-cluster]$ cat ceph.client.user1.keyring

[client.user1]

key = AQAru7tjKbEQNRAAwmb+0hnxray9Os+pfgX0JQ==

caps mon = "allow r"

caps osd = "allow rwx pool=rbd-data1"
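The add / create-keyring / get -o sequence above can be collapsed into one call: `ceph auth get-or-create` creates the user if it does not exist (or returns the existing key if the caps match) and `-o` writes the keyring file. A minimal sketch with the same capabilities as above; `create_rbd_user` and `DRY_RUN` are hypothetical helpers.

```shell
#!/usr/bin/env bash
# Print a command, and run it unless DRY_RUN is set (hypothetical helper).
run() {
  echo "+ $*"
  if [ -z "${DRY_RUN:-}" ]; then "$@"; fi
}

# usage: create_rbd_user NAME POOL
# Creates client.NAME (read-only on the monitors, rwx on POOL) and writes
# ceph.client.NAME.keyring into the current directory.
create_rbd_user() {
  local name=$1 pool=$2
  run ceph auth get-or-create "client.$name" \
    mon 'allow r' \
    osd "allow rwx pool=$pool" \
    -o "ceph.client.$name.keyring"
}
```

Since Luminous, the Ceph documentation also describes `profile rbd` capabilities (`mon 'profile rbd' osd 'profile rbd pool=rbd-data1'`); as a monitor cap, the profile additionally lets the client blocklist a dead lock holder, which matters for images with exclusive-lock.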

Install the Ceph client:

[root@ceph-client2 ~]# yum install -y https://mirrors.tuna.tsinghua.edu.cn/ceph/rpm-mimic/el7/noarch/ceph-release-1-1.el7.noarch.rpm

[root@ceph-client2 ~]# yum install -y epel-release

[root@ceph-client2 ~]# yum install -y ceph-common

Verify the permissions from the client:

[ceph@deploy ceph-cluster]$ scp ceph.conf ceph.client.user1.keyring root@192.168.1.12:/etc/ceph/

[root@ceph-client2 ~]# cd /etc/ceph/

[root@ceph-client2 ceph]# ll

total 12

-rw------- 1 root root 124 Jan 9 15:05 ceph.client.user1.keyring

-rw-r--r-- 1 root root 262 Jan 9 15:05 ceph.conf

-rw-r--r-- 1 root root 92 Apr 24 2020 rbdmap

[root@ceph-client2 ceph]# ceph --user user1 -s

cluster:

id: 845224fe-1461-48a4-884b-99b7b6327ae9

health: HEALTH_WARN

application not enabled on 1 pool(s)

1 pools have pg_num > pgp_num

services:

mon: 3 daemons, quorum mon01,mon02,mon03

mgr: mgr01(active), standbys: mgr02

mds: mycephfs-1/1/1 up {0=mgr01=up:active}

osd: 15 osds: 15 up, 15 in

data:

pools: 9 pools, 288 pgs

objects: 362 objects, 453 MiB

usage: 17 GiB used, 1.4 TiB / 1.5 TiB avail

pgs: 288 active+clean

Map the RBD image:

[root@ceph-client2 ceph]# rbd --user user1 -p rbd-data1 map data-img2

/dev/rbd0

[root@ceph-client2 ceph]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 5368 MB, 5368709120 bytes, 10485760 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes

Format and use the RBD image:

[root@ceph-client2 ceph]# mkfs.ext4 /dev/rbd0

[root@ceph-client2 /]# mkdir /data

[root@ceph-client2 /]# mount /dev/rbd0 /data/

[root@ceph-client2 /]# cp /var/log/messages /data/

[root@ceph-client2 /]# ll /data/

total 1164

drwx------ 2 root root 16384 Jan 9 15:09 lost+found

-rw------- 1 root root 1173865 Jan 9 15:10 messages

[root@ceph-client2 /]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 19G 2.4G 16G 13% /

/dev/sda1 xfs 1.1G 158M 906M 15% /boot

tmpfs tmpfs 396M 0 396M 0% /run/user/0

/dev/rbd0 ext4 5.2G 23M 4.9G 1% /data

# Verify the image status on the admin node

[ceph@deploy ceph-cluster]$ rbd ls -p rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2 excl   # exclusive lock held: the image is mapped by a client

data-img2 5 GiB 2

4.3 Growing an RBD image

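An image can be grown; shrinking is not recommended, because it discards all data beyond the new size (rbd resize only shrinks an image when --allow-shrink is given).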

[ceph@deploy ceph-cluster]$ rbd ls -p rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2 excl

data-img2 5 GiB 2

# Grow the RBD image

[ceph@deploy ceph-cluster]$ rbd resize --pool rbd-data1 --image data-img2 --size 8G

Resizing image: 100% complete...done.

[ceph@deploy ceph-cluster]$ rbd ls -p rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2 excl

data-img2 8 GiB 2

[root@ceph-client2 /]# fdisk -l /dev/rbd0

Disk /dev/rbd0: 8589 MB, 8589934592 bytes, 16777216 sectors

Units = sectors of 1 * 512 = 512 bytes

Sector size (logical/physical): 512 bytes / 512 bytes

I/O size (minimum/optimal): 4194304 bytes / 4194304 bytes
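`rbd resize` grows only the block device: fdisk now reports 8589934592 bytes, but the ext4 filesystem on /dev/rbd0 keeps its old size (the df output in 4.4 still shows 5.2G for /data) until it is grown as well. A sketch, assuming the filesystem is mounted; `grow_fs` and `DRY_RUN` are hypothetical helpers, while resize2fs and xfs_growfs are the standard grow tools for ext4 and XFS.

```shell
#!/usr/bin/env bash
# Print a command, and run it unless DRY_RUN is set (hypothetical helper).
run() {
  echo "+ $*"
  if [ -z "${DRY_RUN:-}" ]; then "$@"; fi
}

# usage: grow_fs DEVICE MOUNTPOINT FSTYPE
# Grows a mounted filesystem to fill its (already resized) device.
grow_fs() {
  local dev=$1 mnt=$2 fstype=$3
  case $fstype in
    ext2|ext3|ext4) run resize2fs "$dev" ;;    # resize2fs takes the device
    xfs)            run xfs_growfs "$mnt" ;;   # xfs_growfs takes the mount point
    *) echo "unsupported filesystem: $fstype" >&2; return 1 ;;
  esac
}
```

On this client, `grow_fs /dev/rbd0 /data "$(findmnt -n -o FSTYPE /data)"` would run resize2fs; on the first client, whose images carry XFS, the same call would run xfs_growfs instead.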

4.4 Mount automatically at boot

[root@ceph-client2 /]# cat /etc/rc.d/rc.local

rbd --user user1 -p rbd-data1 map data-img2

mount /dev/rbd0 /data/

[root@ceph-client2 /]# chmod a+x /etc/rc.d/rc.local

[root@ceph-client2 ~]# reboot

# Show the mappings

[root@ceph-client2 ~]# rbd showmapped

id pool image snap device

0 rbd-data1 data-img2 - /dev/rbd0

# Verify the mount

[root@ceph-client2 ~]# df -TH

Filesystem Type Size Used Avail Use% Mounted on

devtmpfs devtmpfs 2.0G 0 2.0G 0% /dev

tmpfs tmpfs 2.0G 0 2.0G 0% /dev/shm

tmpfs tmpfs 2.0G 13M 2.0G 1% /run

tmpfs tmpfs 2.0G 0 2.0G 0% /sys/fs/cgroup

/dev/mapper/centos-root xfs 19G 2.4G 16G 13% /

/dev/sda1 xfs 1.1G 158M 906M 15% /boot

/dev/rbd0 ext4 5.2G 23M 4.9G 1% /data

tmpfs tmpfs 396M 0 396M 0% /run/user/0
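The rc.local approach relies on the image always appearing as /dev/rbd0. ceph-common also ships an rbdmap mechanism (the /etc/ceph/rbdmap file listed in 4.2, plus an rbdmap systemd unit), and udev creates stable /dev/rbd/POOL/IMAGE links. The sketch below prints the two lines such a setup needs, assuming the rbdmap file format as I understand it from the rbdmap(8) man page and the user1 keyring path used above; `rbdmap_entries` is hypothetical, and the mount behaviour should be checked against the man page of your release.

```shell
#!/usr/bin/env bash
# usage: rbdmap_entries POOL IMAGE USER MOUNTPOINT FSTYPE
# Line 1 belongs in /etc/ceph/rbdmap, line 2 in /etc/fstab.
rbdmap_entries() {
  local pool=$1 image=$2 user=$3 mnt=$4 fstype=$5
  # Which image to map at boot, and with which cephx identity and keyring.
  echo "$pool/$image id=$user,keyring=/etc/ceph/ceph.client.$user.keyring"
  # Stable udev path instead of /dev/rbd0; noauto keeps the normal boot-time
  # mount from running before the image has been mapped.
  echo "/dev/rbd/$pool/$image $mnt $fstype noauto 0 0"
}
```

After appending the lines to the two files, `systemctl enable rbdmap.service` would take over from the rc.local lines.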

4.5 Unmapping and deleting RBD images

# Unmount the filesystem and unmap the RBD image

[root@ceph-client2 ~]# umount /data

[root@ceph-client2 ~]# rbd --user user1 -p rbd-data1 unmap data-img2

# Delete the RBD image

# Deleting an image also deletes its data, and the data cannot be recovered, so be careful when running this.

[ceph@deploy ceph-cluster]$ rbd rm --pool rbd-data1 --image data-img2

Removing image: 100% complete...done.

RBD image trash:

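A deleted image cannot be recovered. Alternatively, you can first move the image to the trash, and remove it from the trash later once you are sure it is no longer needed.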

# Check the image status (the watcher shows the image is still mapped by the client at 192.168.1.11)

[ceph@deploy ceph-cluster]$ rbd status --pool rbd-data1 --image data-img1

Watchers:

watcher=192.168.1.11:0/2873007892 client.5904 cookie=18446462598732840961

# Move the image to the trash

[ceph@deploy ceph-cluster]$ rbd trash move --pool rbd-data1 --image data-img1

# List the images in the trash

[ceph@deploy ceph-cluster]$ rbd trash list --pool rbd-data1

15cf6b8b4567 data-img1

# Restore the image

[ceph@deploy ceph-cluster]$ rbd trash restore --pool rbd-data1 --image data-img1 --image-id 15cf6b8b4567

[ceph@deploy ceph-cluster]$ rbd ls --pool rbd-data1 -l

NAME SIZE PARENT FMT PROT LOCK

data-img1 3 GiB 2
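`rbd trash restore` needs the image ID, not just the name. A small sketch that looks the ID up by name from `rbd trash list`, assuming the two-column `ID NAME` output shown above; `trash_id_for` is a hypothetical helper. If several trashed images share a name it prints every matching ID, so check the result before using it.

```shell
#!/usr/bin/env bash
# usage: rbd trash list --pool POOL | trash_id_for IMAGE_NAME
# Reads "ID NAME" lines on stdin and prints the ID of each entry named IMAGE_NAME.
trash_id_for() {
  awk -v name="$1" '$2 == name { print $1 }'
}
```

For example: `id=$(rbd trash list --pool rbd-data1 | trash_id_for data-img1)`, then `rbd trash restore --pool rbd-data1 --image data-img1 --image-id "$id"` as above, or `rbd trash rm "rbd-data1/$id"` to delete it permanently.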

# Remove an image from the trash

If the image is no longer needed, use rbd trash remove to delete it from the trash permanently.

5. Image snapshots

Current data on the client (data-img1 still holds the MySQL files written in 4.1):

[root@ceph-client2 ~]# rbd --user user1 -p rbd-data1 map data-img1

/dev/rbd0

[root@ceph-client2 ~]# rbd showmapped

id pool image snap device

0 rbd-data1 data-img1 - /dev/rbd0

[root@ceph-client2 ~]# mount /dev/rbd0 /data/

[root@ceph-client2 ~]# ll /data/

total 110604

-rw-rw---- 1 polkitd input 56 Jan 9 14:54 auto.cnf

-rw-rw---- 1 polkitd input 12582912 Jan 9 15:27 ibdata1

-rw-rw---- 1 polkitd input 50331648 Jan 9 15:27 ib_logfile0

-rw-rw---- 1 polkitd input 50331648 Jan 9 14:54 ib_logfile1

drwx------ 2 polkitd input 4096 Jan 9 14:54 mysql

drwx------ 2 polkitd input 4096 Jan 9 14:54 performance_schema

Create and verify a snapshot:

# Create a snapshot

[ceph@deploy ceph-cluster]$ rbd snap create --pool rbd-data1 --image data-img1 --snap img1-snap01

# Verify the snapshot

[ceph@deploy ceph-cluster]$ rbd snap list --pool rbd-data1 --image data-img1

SNAPID NAME SIZE TIMESTAMP

4 img1-snap01 3 GiB Mon Jan 9 15:35:29 2023

Delete data and restore it from the snapshot:

# Delete data on the client

[root@ceph-client2 ~]# ll /data/

total 110604

-rw-rw---- 1 polkitd input 56 Jan 9 14:54 auto.cnf

-rw-rw---- 1 polkitd input 12582912 Jan 9 15:27 ibdata1

-rw-rw---- 1 polkitd input 50331648 Jan 9 15:27 ib_logfile0

-rw-rw---- 1 polkitd input 50331648 Jan 9 14:54 ib_logfile1

drwx------ 2 polkitd input 4096 Jan 9 14:54 mysql

drwx------ 2 polkitd input 4096 Jan 9 14:54 performance_schema

[root@ceph-client2 ~]# rm -rf /data/auto.cnf

[root@ceph-client2 ~]# ll /data/

total 110600

-rw-rw---- 1 polkitd input 12582912 Jan 9 15:27 ibdata1

-rw-rw---- 1 polkitd input 50331648 Jan 9 15:27 ib_logfile0

-rw-rw---- 1 polkitd input 50331648 Jan 9 14:54 ib_logfile1

drwx------ 2 polkitd input 4096 Jan 9 14:54 mysql

drwx------ 2 polkitd input 4096 Jan 9 14:54 performance_schema

# Unmount and unmap the RBD image before rolling back

[root@ceph-client2 ~]# umount /data

[root@ceph-client2 ~]# rbd --user user1 -p rbd-data1 unmap data-img1

# Roll back to the snapshot

[ceph@deploy ceph-cluster]$ rbd snap rollback --pool rbd-data1 --image data-img1 --snap img1-snap01

Rolling back to snapshot: 100% complete...done.

# Verify the data on the client

[root@ceph-client2 ~]# rbd --user user1 -p rbd-data1 map data-img1

/dev/rbd0

[root@ceph-client2 ~]# mount /dev/rbd0 /data/

[root@ceph-client2 ~]# ll /data/

total 110604

-rw-rw---- 1 polkitd input 56 Jan 9 14:54 auto.cnf

-rw-rw---- 1 polkitd input 12582912 Jan 9 15:27 ibdata1

-rw-rw---- 1 polkitd input 50331648 Jan 9 15:27 ib_logfile0

-rw-rw---- 1 polkitd input 50331648 Jan 9 14:54 ib_logfile1

drwx------ 2 polkitd input 4096 Jan 9 14:54 mysql

drwx------ 2 polkitd input 4096 Jan 9 14:54 performance_schema

Delete the snapshot:

[ceph@deploy ceph-cluster]$ rbd snap remove --pool rbd-data1 --image data-img1 --snap img1-snap01

Removing snap: 100% complete...done.

[ceph@deploy ceph-cluster]$ rbd snap list --pool rbd-data1 --image data-img1

Snapshot count limit:

# Set or change the maximum number of snapshots for an image

[ceph@deploy ceph-cluster]$ rbd snap limit set --pool rbd-data1 --image data-img1 --limit 30

# Clear the snapshot limit

[ceph@deploy ceph-cluster]$ rbd snap limit clear --pool rbd-data1 --image data-img1
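As I understand it, a snapshot limit only makes new snapshot creation fail once the count is reached; it does not delete old snapshots. A sketch of a rotation helper, assuming `rbd snap list` prints a header followed by `SNAPID NAME ...` rows as shown earlier, and that a higher SNAPID means a newer snapshot; `snaps_to_prune` is hypothetical. It prints the names of all but the newest KEEP snapshots, oldest first.

```shell
#!/usr/bin/env bash
# usage: rbd snap list --pool POOL --image IMAGE | snaps_to_prune KEEP
# Prints the snapshot names to remove so that only the newest KEEP remain.
snaps_to_prune() {
  local keep=$1
  # Skip the header, keep "SNAPID NAME", and sort oldest (lowest id) first.
  awk 'NR > 1 && $1 ~ /^[0-9]+$/ { print $1, $2 }' | sort -n -k1,1 |
    awk -v keep="$keep" '{ name[NR] = $2 }
      END { for (i = 1; i <= NR - keep; i++) print name[i] }'
}
```

A rotation run could then be `rbd snap list --pool rbd-data1 --image data-img1 | snaps_to_prune 7 | while read -r s; do rbd snap remove --pool rbd-data1 --image data-img1 --snap "$s"; done`, keeping the count safely below a limit such as the 30 set above.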